Linux kernel keyrings, container isolation and maybe some Kerberos

On a recent project I stumbled over Kerberos tickets being inadvertently shared across containers on a node, which obviously caught my attention, as I'm not keen on sharing such secrets across workloads. This post describes why this happens and how to prevent it.

Over the last few days I've been working, coincidentally, on several projects involving Linux, Kerberos and, as most of the time, Kubernetes. On one of them I noticed that Kerberos tickets were inadvertently shared across containers on a node.

Kerberos and the inadvertently shared cache

The use case was to enable Kerberos authentication of containers against a Kerberos environment (a Microsoft Active Directory). During testing I noticed that kinit immediately presented me with a password prompt for a specific username. The odd thing was that the container I was working in was brand new and empty. So I deleted the pod and started again: kinit prompted right away for the password of that same user, and klist presented me with a list of tickets.

    klist
    Ticket cache: KEYRING:persistent:1000:1000
    Default principal: mrniceguy@LAB2.LOCAL

    Valid starting       Expires              Service principal
    02/01/2023 21:55:23  02/02/2023 07:55:23  krbtgt/LAB2.LOCAL@LAB2.LOCAL
            renew until 02/08/2023 21:55:13

So I checked where Kerberos caches the tickets. As the output above shows, the ticket cache is KEYRING:persistent:1000:1000, which means the tickets are kept in kernel memory.
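To make the cache name a bit more tangible, here is a minimal Python sketch that splits a name like the one klist printed into its type and the type-specific rest. The function names are my own invention for illustration, and reading the second field of a persistent keyring name as the UID is an assumption based on the documented KEYRING:persistent:uidnumber form.

```python
from typing import NamedTuple, Optional


class CCacheName(NamedTuple):
    cache_type: str  # e.g. "KEYRING", "FILE", "DIR", "MEMORY"
    residual: str    # type-specific remainder of the name


def parse_ccache_name(name: str) -> CCacheName:
    """Split a credential cache name of the form TYPE:residual."""
    cache_type, sep, residual = name.partition(":")
    if not sep:
        # Assumption: a name without a type prefix is treated as a FILE cache.
        return CCacheName("FILE", name)
    return CCacheName(cache_type.upper(), residual)


def keyring_uid(name: str) -> Optional[int]:
    """Return the UID of a KEYRING:persistent:<uid>[:...] name, else None."""
    parsed = parse_ccache_name(name)
    if parsed.cache_type != "KEYRING":
        return None
    parts = parsed.residual.split(":")
    # Assumption: the field right after "persistent" is the numeric UID.
    if len(parts) >= 2 and parts[0] == "persistent" and parts[1].isdigit():
        return int(parts[1])
    return None


if __name__ == "__main__":
    name = "KEYRING:persistent:1000:1000"
    print(parse_ccache_name(name))
    print(keyring_uid(name))
```

The point of pulling out the UID is that, in this naming scheme, it is the only thing that identifies whose tickets these are. Nothing in the name refers to a pod or a container.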

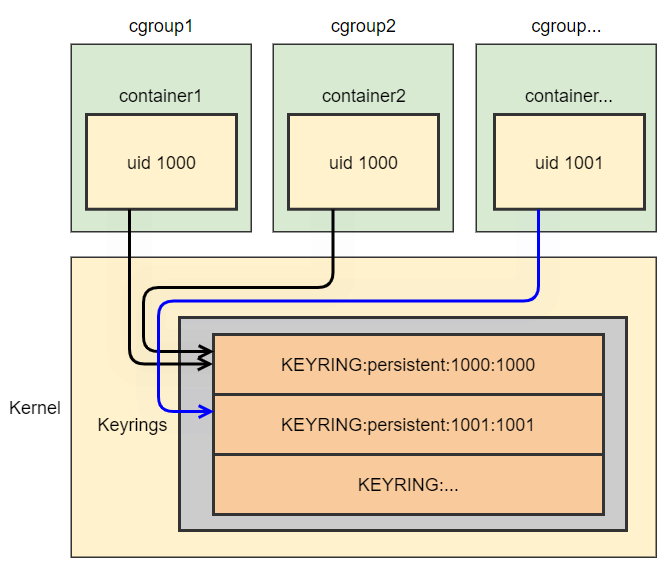

KEYRING is Linux-specific, and uses the kernel keyring support to store credential data in unswappable kernel memory where only the current user should be able to access it.

Logically you might imagine the keyring similar to this:

If you want to read more about keyrings, check out the keyrings(7) man page.

This means the cache is shared in the kernel

What this situation shows, quite subtly, is that several containers can access the same kernel memory. The kernel of a node is shared by all containers running on it, and the persistent keyring is looked up by UID, not by container. This means that if you can launch pods on a node where Kerberos uses the keyring cache, you can possibly just change the runAsUser UID in your pod spec and access the keyrings of other containers.

This is obviously not a day-to-day scenario, but if it can happen in your environment you want to make sure to prevent it!
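The reasoning above can be modelled in a few lines of Python. This is only a sketch under the assumptions that all containers on a node share the host's kernel and user namespace and that the persistent keyring is picked purely by UID. The pod dictionaries with the keys name, node and uid are a hypothetical input format, not something Kubernetes hands you in this shape.

```python
from collections import defaultdict


def shared_uid_groups(pods):
    """Return {(node, uid): [pod names]} for every node/UID pair used by
    more than one pod.

    `pods` is an iterable of dicts with the hypothetical keys
    "name", "node" and "uid" (the effective runAsUser of the pod).
    """
    groups = defaultdict(list)
    for pod in pods:
        groups[(pod["node"], pod["uid"])].append(pod["name"])
    # Only groups with at least two pods would share a persistent keyring.
    return {key: sorted(names) for key, names in groups.items() if len(names) > 1}


if __name__ == "__main__":
    example = [
        {"name": "app-1", "node": "node-a", "uid": 1000},
        {"name": "app-2", "node": "node-a", "uid": 1000},
        {"name": "app-3", "node": "node-b", "uid": 1000},
    ]
    for (node, uid), names in shared_uid_groups(example).items():
        print(f"{node}: uid {uid} is used by {', '.join(names)}")
```

Such a check only finds accidental overlaps. It does nothing against someone who deliberately picks another workload's UID, which is why the solutions below change where the tickets are stored or how the container is isolated.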

Solution 1 - use other cache backends

Kerberos offers a variety of ticket cache types.

One configuration that uses a file within the container could be:

    [libdefaults]
    default_ccache_name = FILE:/tmp/krb_%{uid}.keytab

This places the ticket cache into the /tmp directory of your local filesystem (which is hopefully not shared across your pods :-)). You can adjust the path depending on your own needs and even move this onto a persistentVolume in case you need to keep tickets across node changes.
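If you want to verify which cache a given krb5.conf selects, for example in a pipeline that builds your images, a small check could look like the following sketch. It is a deliberately naive line parser with made-up function names. It does not follow include or includedir directives and does not know the built-in default that applies when the setting is missing.

```python
def default_ccache_name(conf_text):
    """Return the first default_ccache_name found in [libdefaults], or None.

    How libkrb5 resolves duplicate entries is not modelled here.
    """
    section = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith(("#", ";")):
            continue
        # Track the current [section] header.
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
            continue
        if section == "libdefaults" and "=" in line:
            key, _, value = line.partition("=")
            if key.strip() == "default_ccache_name":
                return value.strip()
    return None


def uses_kernel_keyring(conf_text):
    """True if the configured default cache is of type KEYRING."""
    name = default_ccache_name(conf_text)
    return name is not None and name.upper().startswith("KEYRING:")


if __name__ == "__main__":
    sample = """
[libdefaults]
default_ccache_name = FILE:/tmp/krb_%{uid}.keytab
"""
    print(default_ccache_name(sample))
    print(uses_kernel_keyring(sample))
```

A result of None only means that this one file does not set the value, so treat it as "unknown" rather than "safe".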

As a reference, this is a full krb5.conf I'm using in the tests.

- it's using DNS for realm lookup (if possible)

- it's using DNS for KDC lookup

    [logging]
    default = FILE:/var/log/krb5libs.log
    kdc = FILE:/var/log/krb5kdc.log
    admin_server = FILE:/var/log/kadmind.log

    [libdefaults]
    dns_lookup_realm = true
    dns_lookup_kdc = true
    ticket_lifetime = 24h
    renew_lifetime = 7d
    forwardable = true
    rdns = false
    pkinit_anchors = FILE:/etc/pki/tls/certs/ca-bundle.crt
    spake_preauth_groups = edwards25519
    default_ccache_name = FILE:/tmp/krb_%{uid}.keytab
    default_tkt_enctypes = aes256-cts-hmac-sha1-96
    default_tgs_enctypes = aes256-cts-hmac-sha1-96
    permitted_enctypes = aes256-cts-hmac-sha1-96
    allow_weak_crypto = false
    #default_realm = LAB2.LOCAL

    [realms]
    # omit for realms available via dns
    # LAB2.LOCAL = {
    # }

    [domain_realm]
    # omit if realm dns lookup works
    # .lab2.local = LAB2.LOCAL
    # lab2.local = LAB2.LOCAL

Solution 2 - strengthen the sandboxing

It is well known that containers do not provide isolation comparable to virtual machines. That is part of the design: they are very lightweight, made for fast startup and so on. So you cannot give every container its own kernel, as that would increase the base footprint.

On the other hand this example shows that the isolation between containers is weak and you need to make sure your security requirements are not affected by this.

One viable way to increase the isolation of your containers is to use a "virtual kernel" per container, which is what gVisor offers. Its runtime, runsc, can be made available as an alternative runtimeClass that isolates containers more strictly.

The definition of a runtimeClass using runsc (gVisor) as handler:

    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: gvisor
    handler: runsc

An example for a pod using another runtimeClass looks like this (taken from here):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpd
      labels:
        app: httpd
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: httpd
      template:
        metadata:
          labels:
            app: httpd
        spec:
          runtimeClassName: gvisor
          containers:
          - name: httpd
            image: httpd

Summary - containers & isolation

It's quite well known that the security boundaries between containers are rather low. Despite knowing this, I was quite astonished at how easy it is to "forget" some data in the kernel where other containers can access it. The interesting thing here is that this is not a security problem in the sense of a vulnerability: it happens by design.

This is why it is so important to understand that workloads using mechanisms like the one shown here can have unwanted side effects when they run in loosely isolated environments like containers, simply because the concept of containers did not exist when these kernel features were designed.

So, make sure you understand your workloads on as many layers as possible, so that such requirements and risks feed into your design decisions when planning your next environments.