Docker - Hardening with firewalld

Containers are not virtual machines, yet we might want to treat hosts running container workloads like hypervisors and apply limitations on container networking. This guide describes a way to limit container networking on Docker-based container hosts using firewalld.

The consistent adoption of microservices goes hand in hand with production use of containerized applications. Running multiple containers on a single host is, from a logical perspective, similar to running a hypervisor that hosts several virtual machines: a single host runs several distinct application workloads. Running these workloads in separate virtual networks (container networks) effectively isolates the services from each other. External access is implemented using exposed ports. In general this works very well and we don't have to deal with much network configuration when running container workloads. Yet, there is one thing to consider.

Container access to local networks

A container running on docker has full network access by default. This implies accessing remote services on the internet as well as accessing the local network. Just like any other application running on an operating system, a container has no limitation on outgoing network traffic.

From a security perspective we might want to limit this access:

- Containers may get automatically updated (foreign sources)

- Containers may get compromised

If one of your containers is compromised, it's useful to ensure that the container cannot access local resources.

Isn't this part of the security between hosts?

Yes! Each host in your environment needs to ensure that it cannot be attacked from another host in the same network. Zero-trust!

Why treat containers otherwise?

The issue with containers is that they run on a host and can not only access the network but also all endpoints on the host itself.

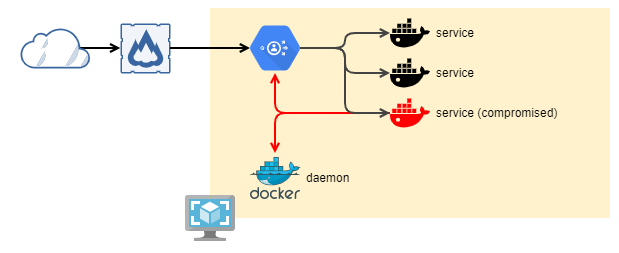

- For example, if you are running a reverse proxy that routes incoming requests to different containers, an attacker might use a hijacked container to access a configuration REST API on that reverse proxy in order to reconfigure the routing.

- In another scenario Docker may expose client access using a TCP socket instead of a unix socket. In this case a container might simply access the socket and spawn new containers that in turn run with elevated privileges.

- You might also be running other workloads on your nodes like GlusterFS or Ceph, or even just an NFS share that runs on your internal network and might be accessible from any of your container hosts.

The attack vector looks something like this:

Limiting network access

Given the situations described above, we would like to ensure that services running within our containers cannot communicate with local resources. As most microservices don't access local resources at all, we are going to prevent communication to the container host as well as to the local network.

Our implementation makes use of firewall-cmd which in turn utilizes iptables/nftables for actual filtering.

Requirements

You need to install firewalld unless it's already installed. On Debian/Ubuntu-based systems ufw might already be installed. ufw is also capable of implementing the solution; after all, we're just implementing some iptables/nftables rules. Administration using firewall-cmd, provided by firewalld, is just easier and avoids fiddling with configuration files.
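As a minimal sketch of this requirement check, the snippet below tests whether `firewall-cmd` is on the PATH and prints an install hint otherwise. The helper name `have_cmd` is made up for illustration, and the package manager commands are the usual ones for the respective distribution families; adjust them to your system.

```shell
#!/bin/sh
# Sketch: check that firewalld's CLI is available before applying any rules.
# have_cmd is an illustrative helper name, not part of firewalld.

have_cmd() {
  # command -v succeeds only if the name resolves to something runnable
  command -v "$1" >/dev/null 2>&1
}

if have_cmd firewall-cmd; then
  echo "firewall-cmd found"
else
  echo "firewall-cmd not found; install firewalld first, for example:"
  echo "  dnf install firewalld    # Fedora/RHEL family"
  echo "  apt install firewalld    # Debian/Ubuntu"
fi
```

Once installed, `firewall-cmd --state` reports whether the daemon is actually running.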

Configuration

Applying the restrictions is done using a set of commands, shown below.

These commands will do the following:

- create several chains

- redirect outbound traffic from containers if targeting the loopback interface

- redirect outbound traffic from containers if targeting an eth* interface

- dropped packets will be logged (with rate limiting)

The script is shown here:

# create required chains

# ensure to create DOCKER-USER chain

# also create the deny-internal & drop chains

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER-DROP

# prepare log & drop chain

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix "DOCKER_NET_DROP "

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DROP 128 -j DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix "DOCKER_NET_DROP "

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DROP 128 -j DROP

# add redirects to internal chains

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

# add default rules that deny traffic to all internal interfaces

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 10.0.0.0/8 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 127.0.0.0/8 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 169.254.0.0/16 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 172.16.0.0/12 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 192.168.0.0/16 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 224.0.0.0/4 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fe80::/10 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fc00::/7 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d ff00::/8 -j DOCKER-USER-DROP

Having created the rules, you need to reload the ruleset using firewall-cmd --reload.

All rules will be created for ipv4 and ipv6. The following destinations will be dropped.

| Protocol | Destination | Description |
|---|---|---|
| IPv4 | 10.0.0.0/8 | private range |
| IPv4 | 127.0.0.0/8 | loopback addresses |
| IPv4 | 169.254.0.0/16 | APIPA network |
| IPv4 | 172.16.0.0/12 | private range |
| IPv4 | 192.168.0.0/16 | private range |
| IPv4 | 224.0.0.0/4 | multicast net |
| IPv6 | fe80::/10 | link-local |
| IPv6 | fc00::/7 | unique local unicast (private networks) |
| IPv6 | ff00::/8 | multicast |
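Since the per-destination rules differ only in address family and destination, they could also be generated from the list above. The following is a sketch, not the original script: the names `DRY_RUN`, `run` and `emit_deny_rules` are hypothetical, and by default the commands are only printed so the result can be reviewed first.

```shell
#!/bin/sh
# Sketch: generate the DOCKER-USER-DENY-INTERNAL rules from destination lists.
# DRY_RUN, run and emit_deny_rules are illustrative names, not firewalld features.

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

ipv4_destinations="10.0.0.0/8 127.0.0.0/8 169.254.0.0/16 172.16.0.0/12 192.168.0.0/16 224.0.0.0/4"
ipv6_destinations="fe80::/10 fc00::/7 ff00::/8"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

emit_deny_rules() {
  # $1 = address family (ipv4|ipv6), remaining arguments = destinations
  family=$1
  shift
  for dest in "$@"; do
    run firewall-cmd --permanent --direct --add-rule "$family" filter \
      DOCKER-USER-DENY-INTERNAL 64 -d "$dest" -j DOCKER-USER-DROP
  done
}

# Word splitting of the lists is intended here.
# shellcheck disable=SC2086
emit_deny_rules ipv4 $ipv4_destinations
# shellcheck disable=SC2086
emit_deny_rules ipv6 $ipv6_destinations
```

Running it with `DRY_RUN=0` would execute the commands instead of printing them; a `firewall-cmd --reload` is still required afterwards.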

The packet flow will be as follows.

- packet enters firewall

- depending on the source interface, the DOCKER-USER chain will be triggered

- depending on the outgoing interface, the DOCKER-USER-DENY-INTERNAL chain will be triggered

- if a packet is targeting one of the destination networks, the DOCKER-USER-DROP chain will be executed

- otherwise the processing will return to the DOCKER-USER chain

- packets in the DOCKER-USER-DROP chain will be logged with a rate limit and dropped

As a result packets targeting the desired interfaces (lo & eth*) will be dropped when the destination is a private or non-routable destination.
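To make the destination check more tangible, here is a small sketch that mimics only the IPv4 destination match of the DOCKER-USER-DENY-INTERNAL chain. It ignores the outgoing-interface condition and IPv6, and the function names (`ip_to_int`, `in_cidr`, `verdict`) are invented for this illustration; the real matching is done by iptables/nftables.

```shell
#!/bin/sh
# Sketch: would an IPv4 destination hit one of the dropped networks?
# Only the destination match is modelled; names are illustrative.

ip_to_int() {
  # Convert a dotted-quad address into a 32-bit integer.
  old_ifs=$IFS
  IFS=.
  # shellcheck disable=SC2086
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

in_cidr() {
  # $1 = address, $2 = network in CIDR notation
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

verdict() {
  # Prints "drop" if $1 is inside one of the blocked IPv4 networks, else "pass".
  for net_cidr in 10.0.0.0/8 127.0.0.0/8 169.254.0.0/16 \
                  172.16.0.0/12 192.168.0.0/16 224.0.0.0/4; do
    if in_cidr "$1" "$net_cidr"; then
      echo drop
      return 0
    fi
  done
  echo pass
}

verdict 10.57.17.6   # private range
verdict 8.8.8.8      # public address
```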

Viewing rules

You can also view the rules using firewall-cmd.

[root@lab2-nuv-apphost1 ~]# firewall-cmd --direct --get-all-rules

ipv4 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix 'DOCKER_NET_DROP '

ipv4 filter DOCKER-USER-DROP 128 -j DROP

ipv6 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix 'DOCKER_NET_DROP '

ipv6 filter DOCKER-USER-DROP 128 -j DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 10.0.0.0/8 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 127.0.0.0/8 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 169.254.0.0/16 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 172.16.0.0/12 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 192.168.0.0/16 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 224.0.0.0/4 -j DOCKER-USER-DROP

ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fe80::/10 -j DOCKER-USER-DROP

ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fc00::/7 -j DOCKER-USER-DROP

ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d ff00::/8 -j DOCKER-USER-DROP

ipv4 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

ipv4 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

ipv6 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

ipv6 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

This shows all currently installed rules. Adding the --permanent flag to the command will show the persistent ruleset.
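When the list grows, it can help to look at one chain at a time. The sketch below filters the output format shown above by its third column; `rules_for_chain` is a hypothetical helper name. An exact comparison is used because a plain `grep DOCKER-USER` would also match DOCKER-USER-DROP and DOCKER-USER-DENY-INTERNAL.

```shell
#!/bin/sh
# Sketch: show only the direct rules of a single chain.
# Input on stdin: lines as printed by `firewall-cmd --direct --get-all-rules`,
# i.e. "<family> <table> <chain> <priority> <args...>".

rules_for_chain() {
  # $1 = chain name; compare the third field exactly
  awk -v chain="$1" '$3 == chain'
}

# Usage (on a host with firewalld):
#   firewall-cmd --direct --get-all-rules | rules_for_chain DOCKER-USER
```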

Retrieving dropped packets

Adding application workloads or diagnosing communication issues can be hard if you cannot check a log for rejected traffic. The ruleset above therefore implements logging (with rate limiting) to enable diagnosing dropped packets.

[root@lab2-nuv-apphost1 ~]# dmesg | grep DOCKER_NET_DROP | tail -n 3

[251476.576118] DOCKER_NET_DROP IN=br-77ff7aa6babd OUT=eth0 PHYSIN=veth3689612 MAC=02:42:ca:d5:c0:e1:02:42:ac:15:00:03:08:00 SRC=172.21.0.3 DST=10.57.17.6 LEN=84 TOS=0x00 PREC=0x00 TTL=63 ID=6358 DF PROTO=ICMP TYPE=8 CODE=0 ID=400 SEQ=1227

[251488.864632] DOCKER_NET_DROP IN=br-77ff7aa6babd OUT=eth0 PHYSIN=veth3689612 MAC=02:42:ca:d5:c0:e1:02:42:ac:15:00:03:08:00 SRC=172.21.0.3 DST=10.57.17.6 LEN=84 TOS=0x00 PREC=0x00 TTL=63 ID=12596 DF PROTO=ICMP TYPE=8 CODE=0 ID=400 SEQ=1239

[251501.152167] DOCKER_NET_DROP IN=br-77ff7aa6babd OUT=eth0 PHYSIN=veth3689612 MAC=02:42:ca:d5:c0:e1:02:42:ac:15:00:03:08:00 SRC=172.21.0.3 DST=10.57.17.6 LEN=84 TOS=0x00 PREC=0x00 TTL=63 ID=20392 DF PROTO=ICMP TYPE=8 CODE=0 ID=400 SEQ=1251

dmesg prints all kernel messages; filter using our assigned prefix DOCKER_NET_DROP and you'll get all dropped packets.
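Raw log lines become hard to read once several containers are affected. As a sketch, the following helper condenses them into one line per source, destination, protocol and destination port, with a count in front. The function name `summarize_drops` is an assumption of this example; the field names (SRC=, DST=, PROTO=, DPT=) are those of the kernel's LOG target as seen in the output above.

```shell
#!/bin/sh
# Sketch: summarize DOCKER_NET_DROP log lines read from stdin.
# Output: "<count> <src> <dst> <proto> <dport or ->", most frequent first.

summarize_drops() {
  grep 'DOCKER_NET_DROP' | awk '
    {
      src = dst = proto = ""; dpt = "-"
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^SRC=/)   src   = substr($i, 5)
        if ($i ~ /^DST=/)   dst   = substr($i, 5)
        if ($i ~ /^PROTO=/) proto = substr($i, 7)
        if ($i ~ /^DPT=/)   dpt   = substr($i, 5)   # absent for ICMP
      }
      count[src " " dst " " proto " " dpt]++
    }
    END { for (k in count) print count[k], k }
  ' | sort -rn
}

# Usage (reading the kernel log may require root):
#   dmesg | summarize_drops
```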

Log rate limiting

By default we're setting a rate limit of one log message every 10 seconds (--hashlimit-upto 6/min). The rate limit is enforced per srcip, dstip and dstport. The limit of 10s is chosen to reduce the number of log entries if a container is doing a portscan (which in turn generates a bucket per destination port) while still providing a moderate rate in case a service frequently tries to access the same destination (for example due to a configuration mistake or a service that has not been allowed yet). Additionally, having a log message every 10 seconds might help administrators recognize that the rate limiter has been hit.
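The 10-second figure follows directly from the 6/min value passed to --hashlimit-upto. As a small worked example, the sketch below converts such a rate into the sustained interval between two log entries per bucket; `rate_to_interval` is a made-up helper and only handles the unit spellings listed in the code.

```shell
#!/bin/sh
# Sketch: turn a rate such as "6/min" into seconds between log entries.
# rate_to_interval is an illustrative helper, not an iptables feature.

rate_to_interval() {
  count=${1%/*}
  unit=${1#*/}
  case "$unit" in
    sec|second) secs=1 ;;
    min|minute) secs=60 ;;
    hour)       secs=3600 ;;
    day)        secs=86400 ;;
    *)          return 1 ;;
  esac
  # guard against empty, non-numeric or zero counts
  case "$count" in
    ''|*[!0-9]*|0) return 1 ;;
  esac
  echo $(( secs / count ))
}

rate_to_interval 6/min   # the value used in the ruleset above
```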

Granting communication for desired services

Obviously not every container runs without dependencies on local networks. Common use cases are databases which are not part of the container layer or authentication backends like an LDAP server. This guide therefore takes this into consideration and provides a solution.

Allowing service communication according to your requirements can be achieved by creating a rule that is processed prior to our deny rules. The default rules we've implemented above use a priority of 4096 in the DOCKER-USER chain. Adding an allowed service is done by creating another rule in the DOCKER-USER chain with a priority lower than 4096 and RETURN as its target.

# adding an exception for a granted service destination

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER 32 -d 10.57.17.5 -j RETURN

This rule allows any container to access the destination 10.57.17.5 (which is located on another host) without any further restriction.
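If exceptions are added regularly, a tiny wrapper can guard against the most likely mistake: choosing a priority that is not processed before the deny rules at 4096. This is a sketch under that assumption; `allow_destination` is a hypothetical name, and it only prints the command so it can be reviewed before being run.

```shell
#!/bin/sh
# Sketch: build an exception rule and refuse priorities that would not
# be evaluated before the deny rules (priority 4096).
# allow_destination is an illustrative helper name.

allow_destination() {
  # $1 = priority, $2 = destination address
  prio=$1
  dest=$2
  case "$prio" in
    ''|*[!0-9]*) echo "priority must be a number" >&2; return 1 ;;
  esac
  if [ "$prio" -ge 4096 ]; then
    echo "priority $prio is not lower than 4096" >&2
    return 1
  fi
  # an address containing ':' is treated as IPv6
  case "$dest" in
    *:*) family=ipv6 ;;
    *)   family=ipv4 ;;
  esac
  echo "firewall-cmd --permanent --direct --add-rule $family filter DOCKER-USER $prio -d $dest -j RETURN"
}

allow_destination 32 10.57.17.5
```

The printed command still needs to be executed and followed by `firewall-cmd --reload` to take effect.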

How granular should exceptions be?

The recommendation is to allow communication to other hosts without further limitation, that is, per destination host rather than per port. According to zero-trust, each host always needs to make sure on its own that a compromised host cannot take over the whole network. If you're communicating between network zones this is even more true, as the separation and limitation of services has to be enforced by the gateway firewall.

Why RETURN instead of ACCEPT?

You could, of course, use ACCEPT instead of RETURN. This implies that iptables/nftables will skip processing the rest of the forward chain and pass the packet on. Technically this is even faster than using RETURN. Yet we don't want to bypass any (potentially important) rules that have been created by the Docker daemon itself.

The packet flow in the forward chain is:

- DOCKER-USER chain

- DOCKER chain

If we accept a packet in the DOCKER-USER chain, we also skip the DOCKER chain. As our goal is to let packets pass just as if our limitation didn't exist at all, we don't interfere with Docker's internal processing rules; we simply return to the regular flow.

Graceful implementation of request filtering

If you've set up a container host a while ago and would like to add this network restriction right now, you might wonder if there's a way to add it without affecting services that are already running.

Luckily - there's a way to achieve this.

- Add an audit only ruleset (see below)

- Watch the audit logs

- Add exceptions for services that require internal communication

- If all exceptions are in place: enforce ruleset

The whole ruleset with audit looks as follows.

# create required chains

# ensure to create DOCKER-USER chain

# also create the deny-internal & drop chains

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-chain ipv4 filter DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-chain ipv6 filter DOCKER-USER-DROP

# prepare log & drop chain

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix "DOCKER_NET_DROP "

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DROP 127 -j RETURN

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DROP 128 -j DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DROP 64 -m hashlimit --hashlimit-mode srcip,dstip,dstport --hashlimit-name docker_drop --hashlimit-upto 6/min -j LOG --log-prefix "DOCKER_NET_DROP "

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DROP 127 -j RETURN

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DROP 128 -j DROP

# add redirects to internal chains

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER 4096 -o lo -j DOCKER-USER-DENY-INTERNAL

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER 4096 -o eth+ -j DOCKER-USER-DENY-INTERNAL

# add default rules that deny traffic to all internal interfaces

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 10.0.0.0/8 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 127.0.0.0/8 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 169.254.0.0/16 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 172.16.0.0/12 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 192.168.0.0/16 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv4 filter DOCKER-USER-DENY-INTERNAL 64 -d 224.0.0.0/4 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fe80::/10 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d fc00::/7 -j DOCKER-USER-DROP

firewall-cmd --permanent --direct --add-rule ipv6 filter DOCKER-USER-DENY-INTERNAL 64 -d ff00::/8 -j DOCKER-USER-DROP

In this case we've modified the DOCKER-USER-DROP chain to not DROP packets; we're just logging packets and returning to the calling chain. This is done by injecting RETURN rules right before we drop the packets (using priority 127).

With these rules enabled, check the logs as described above. Once no more services need to be granted, remove the RETURN rules to enforce dropping unintended traffic.
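While the audit ruleset is active, the logged destinations can be turned into a list of candidate exceptions. The sketch below does this for log lines read from stdin; `suggest_exceptions` is a hypothetical helper, the priority 32 is simply the value used in the exception example earlier, and the output is meant to be reviewed by hand rather than applied blindly, since a logged destination is not necessarily a legitimate one.

```shell
#!/bin/sh
# Sketch: derive candidate exception rules from audit log lines (stdin).
# suggest_exceptions is an illustrative name; review the output before use.

suggest_exceptions() {
  grep 'DOCKER_NET_DROP' \
    | tr ' ' '\n' \
    | sed -n 's/^DST=//p' \
    | sort -u \
    | while read -r dest; do
        # an address containing ':' is treated as IPv6
        case "$dest" in
          *:*) family=ipv6 ;;
          *)   family=ipv4 ;;
        esac
        echo "firewall-cmd --permanent --direct --add-rule $family filter DOCKER-USER 32 -d $dest -j RETURN"
      done
}

# Usage (reading the kernel log may require root):
#   dmesg | suggest_exceptions
```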

# remove enforcement bypass

firewall-cmd --permanent --direct --remove-rule ipv4 filter DOCKER-USER-DROP 127 -j RETURN

firewall-cmd --permanent --direct --remove-rule ipv6 filter DOCKER-USER-DROP 127 -j RETURN

# apply to running configuration

firewall-cmd --reload

That's it - yet another step for a better world.